Performance Can Matter

- Matt Zaske

- April 15, 2019

- 5 minutes

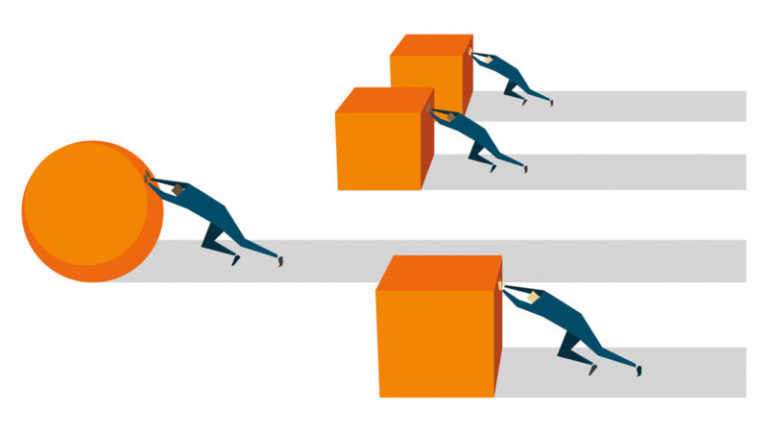

I've had this written down as a topic suggestion for some time, and to balance/counter my post a couple weeks ago regarding performance, there's absolutely another side to that coin.

Some Background

I've written countless database queries throughout my career, and I know for a fact some of them have been...far from superior with regard to performance metrics. I don't claim to be an expert in query performance, but I know what to include and exclude, how to make minor performance tweaks, and perhaps most importantly how to Google. There's always been someone who's solved this problem before...

Once in a while I've chosen to go down the post-processing road and bolt separate query results together rather than make the DB engine do it at the risk of an unintelligible query.

"Any code of your own that you haven't looked at for six or more months might as well have been written by someone else." - Eagleson's law

— Programming Wisdom (@CodeWisdom) March 4, 2019

That being said, combined with my points from gauging if the performance really matters, I can write good code to merge datasets from independent sources (or queries) and include relative comments and other notes in the source to better help future me remember what the hell is actually going on. The process usually involves loading and indexing as necessary individual datasets (JSON, CSV, etc.) into some sort of a multidimensional array, then either building a new file from scratch or appending to a master. It works well when you have to modify the fields or layout of the data to match an import/transport process, and it works really well when you've let the DB engine handle as much of the effort as possible without letting the queries get too gnarly.

An Ask for Assistance

A colleague (now retired, who we'll call Donna) from another campus asked for some assistance in optimizing a query process for a data transport. She knew I'd built out our campus process for the same vendor a handful of years earlier and was having some difficulty pulling a process together. I offered whatever assistance I could.

Donna and I worked in similar roles but had wholly different backgrounds and language/process preferences, so in effect nothing I provided would help her directly but could be modified to her preference and language. We were accomplishing the same task, after all. I gladly shared what I had and after a few weeks heard back from Donna with a few follow-up questions. She was super grateful for the assistance, but couldn't get past the fact her process took two-and-a-half hours to complete. Each time!

Two. And. A. Half. HOURS.

For context (not direct comparison, see above), the process I'd developed took perhaps twenty seconds to complete, DB query to output file. The result file for our campus would have about 2/3 of the record count of Donna's, but certainly that difference wouldn't result in such a scale difference?!

I asked if she'd be willing to share some of her source and queries for a cursory look to see if I could figure out what was so fundamentally different with her process from mine. When she sent the source and I had a few minutes to look, it stuck out like a sore thumb. Right away.

My process uses three distinct queries to generate three different datasets. One dataset is the primary and contains about 7/8 of all the necessary data. The remaining two contain the trailing 1/8 and are often specific one-to-many datasets, which don't "flatten" well against the primary. The flattening is handled in post-processing (to keep the queries more easily understandable). These three datasets contain all qualifying records.

TL;DR: My DB query count totals three (3).

Her queries, on the other hand, were well-nested and run "just in time," meaning that for each primary record there could be up to 26 subsequent queries run for that single record. Further, only the primary identifier was sourced en masse by query, so if she was trying to collect data on 5,000 primary records, she could be running upward of 130,000 distinct queries each time the process ran.

TL;DR: Donna's DB query count was possibly in the hundreds of thousands.

I'm actually kind of amazed that the DB admins weren't asking some serious questions, because a process that runs weekly or daily which pounds on the DB for two-and-a-half ours each time would certainly throw some flags for me. Maybe I wasn't getting the whole story. It doesn't really matter, though, because I had found a relatively simple solution for her.

The JOIN for the Win!

I quickly mentioned to Donna that about twenty of the 26 subqueries could effectively be consolidated into one much more efficient query by way of the SQL JOIN. Left JOIN to be more specific. Even if the JOIN were run once on every primary record, this could cut down the DB pounding from hundreds of thousands to at least a much more manageable few tens of thousands. More ideally, consolidating the query for the primary records would be the biggest win, but that might involve her having to rewrite some other components not shared with me. Options existed, and I'm glad I was able to help identify some solutions.

Ultimately, though, by understanding and leveraging the SQL JOIN this performance would be improved. Even if not perfect, at least it'd keep you out of the 'enemies' category with the DBAs.

Donna was most grateful and had some follow-up questions about process. I never did really get a good feel as to how far she'd gone with regard to consolidating queries, but I'm convinced their process in production doesn't take over two hours to run anymore, which was the goal (some sort of improvement).

Performance can matter. And if something doesn't seem right, I encourage everyone to ask around for a second set of eyes.